The proliferation of AI has rapidly introduced many new software technologies, each with its own potential misconfigurations that can compromise information security. Thus the mission of Cybersecurity Research: uncover the vectors particular to a new technology and measure its cyber risk.

This investigation looks at llama.cpp, an open-source framework for using large language models (LLMs). Llama.cpp has the potential to leak user prompts, but because there are a small number of llama.cpp servers in the wild, the absolute risk of a leak due to llama.cpp is low.

However, in examining the prompt data exposed by poorly secured llama.cpp servers, we found two IP addresses generating text for sexually explicit roleplay involving characters who were children. While our goal in doing this research was to understand the risk associated with exposed llama.cpp servers, the particular data exposed speaks to some of the most pressing safety concerns associated with generative AI.

What is llama.cpp?

Llama.cpp is an open-source project for “Inference of Meta’s LLaMA model (and others) in pure C/C++.” In non-technical terms, llama.cpp is free software that makes it easy to start running free AI models. The best-known AI products, like OpenAI’s ChatGPT, Anthropic’s Claude, or Google’s Gemini, use proprietary models accessed through an application created by the owner (like what you see at chatgpt.com) or through APIs that provide paid access to the models. But there are also many free and open-source large language models that users can download, modify, and run in their own environments. Llama is one family of open models released by Meta and the reason so much AI-related software has “llama” in the name. Projects like llama.cpp or Ollama provide the interface to use these models.

Default llama.cpp user interface

Llama.cpp can be configured as an HTTP server with APIs that allow remote users to interact with llama.cpp. If llama.cpp is configured to run a server and exposes its interface to the public internet, then anonymous remote users can detect the llama.cpp server and interact with it. Generally speaking, it’s a bad idea to expose APIs to the internet unless they’re genuinely intended for everyone in the world to access. However, because llama.cpp is a free and open piece of software, it’s commonly used by hobbyists who may not worry about the information security of a toy app in their personal lab.

These two features, HTTP APIs that can be made publicly accessible and common use by individuals outside of corporate governance, increase the likelihood that users will configure llama.cpp servers with less-than-secure settings.

Risks of exposed llama.cpp APIs

To test the real-life prevalence of those risks, we used two llama.cpp GET APIs: `/props` and `/slots`. Llama.cpp also provides various POST APIs that we did not attempt to use because of the possibility of disrupting server operations. For a complete security audit of an application using llama.cpp, you should also ensure these POST APIs cannot be abused.

The /props endpoint provides metadata about the model parameters. This is similar to the data that Ollama APIs commonly expose. The /v1/models and /health endpoints provide the same data available through /props, separated out to be OpenAI compatible or to simplify performing a health check, respectively. Because they provide redundant data, we did not call these endpoints.

The /slots endpoint provides the actual text of the prompts along with metadata about prompt generation. If /slots is enabled and accessible without authentication, anonymous users can read the messages sent to the model. If anyone is using the model in virtually any way, exposing their inputs is undesirable. The llama.cpp documentation includes this warning about /slots: “This endpoint is intended for debugging and may be modified in future versions. For security reasons, we strongly advise against enabling it in production environments.” Good advice, llama.cpp team!
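For teams auditing their own deployment, a check along these lines can confirm whether the two GET endpoints answer without credentials. This is a minimal sketch, not the tooling used in this research: the function names, the default timeout, and the example address are all assumptions, and it treats any HTTP 200 response to an unauthenticated request as "exposed".

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=5.0):
    """Return the HTTP status of an unauthenticated GET, or None if unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 401 or 404.
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return None


def audit_endpoints(base_url, fetch=fetch_status):
    """Map each endpoint path to True if it returns 200 without credentials."""
    results = {}
    for path in ("/props", "/slots"):
        results[path] = fetch(base_url.rstrip("/") + path) == 200
    return results


# Example (hypothetical address of a server you operate):
# audit_endpoints("http://127.0.0.1:8080")
```

The `fetch` parameter exists only so the check can be exercised without a network connection. Run it only against servers you own or are authorized to assess.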

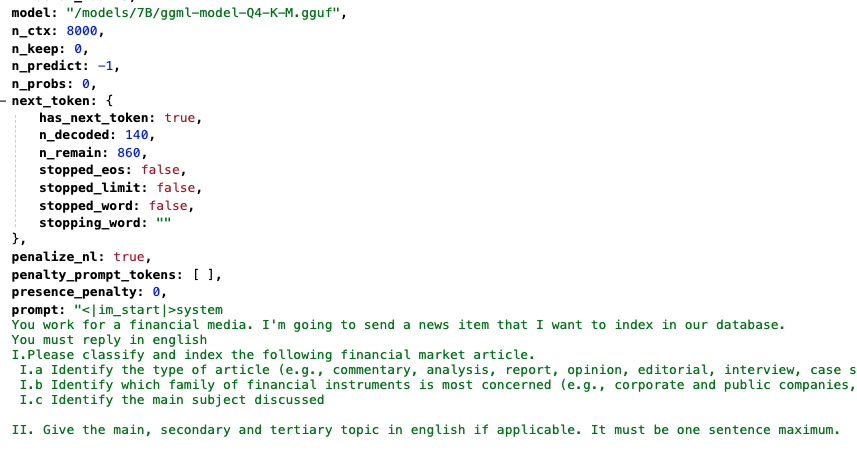

Example of results from /slots endpoint showing prompt

Overall prevalence and distribution of llama.cpp

The first thing to note is that there are a relatively small number of llama.cpp servers exposed worldwide: only about 400 at any given time during our research in March 2025. By comparison, the number of exposed Ollama servers is currently around 20k, almost triple what it was two months ago. However, the opportunity for anonymous users to read prompts from llama.cpp servers makes them potentially more impactful with lower effort to exploit.

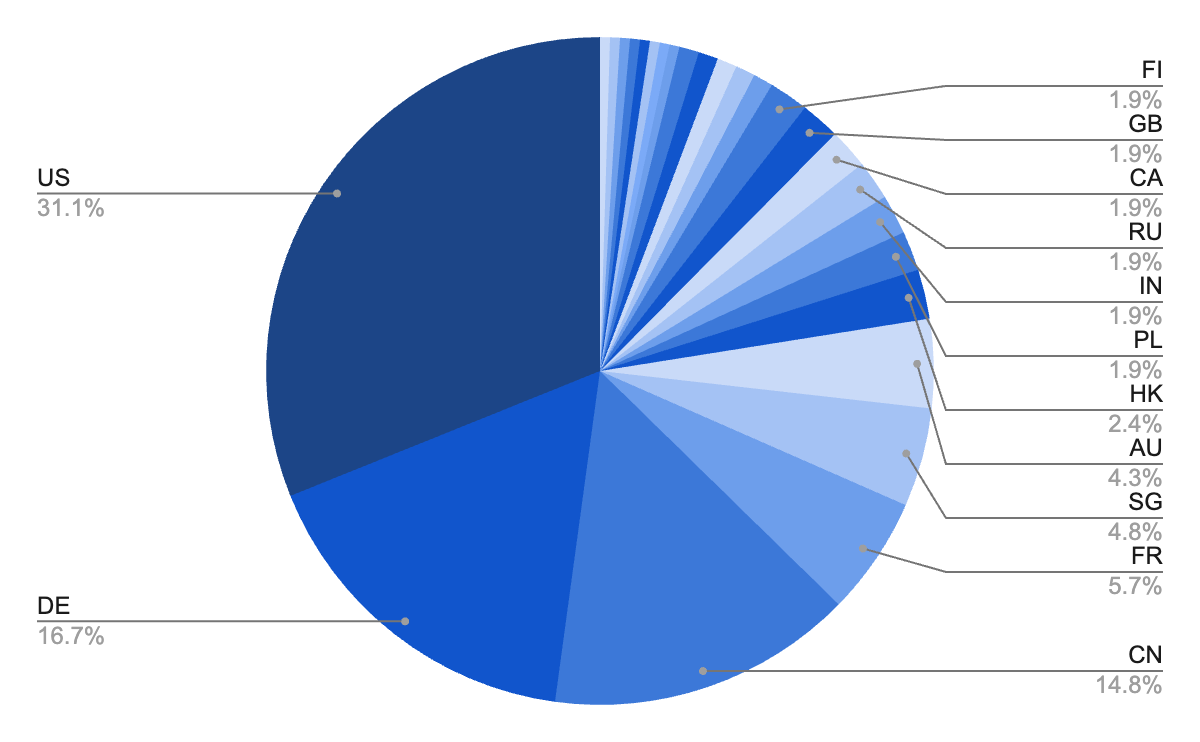

Geographically, the llama.cpp servers are concentrated in the US, with Germany and China clearly in second place. Comparing the distribution of llama.cpp servers to Ollama, llama.cpp is proportionally less common in China and more common in Germany.

Distribution of llama.cpp servers showing concentration in US, Germany, and China

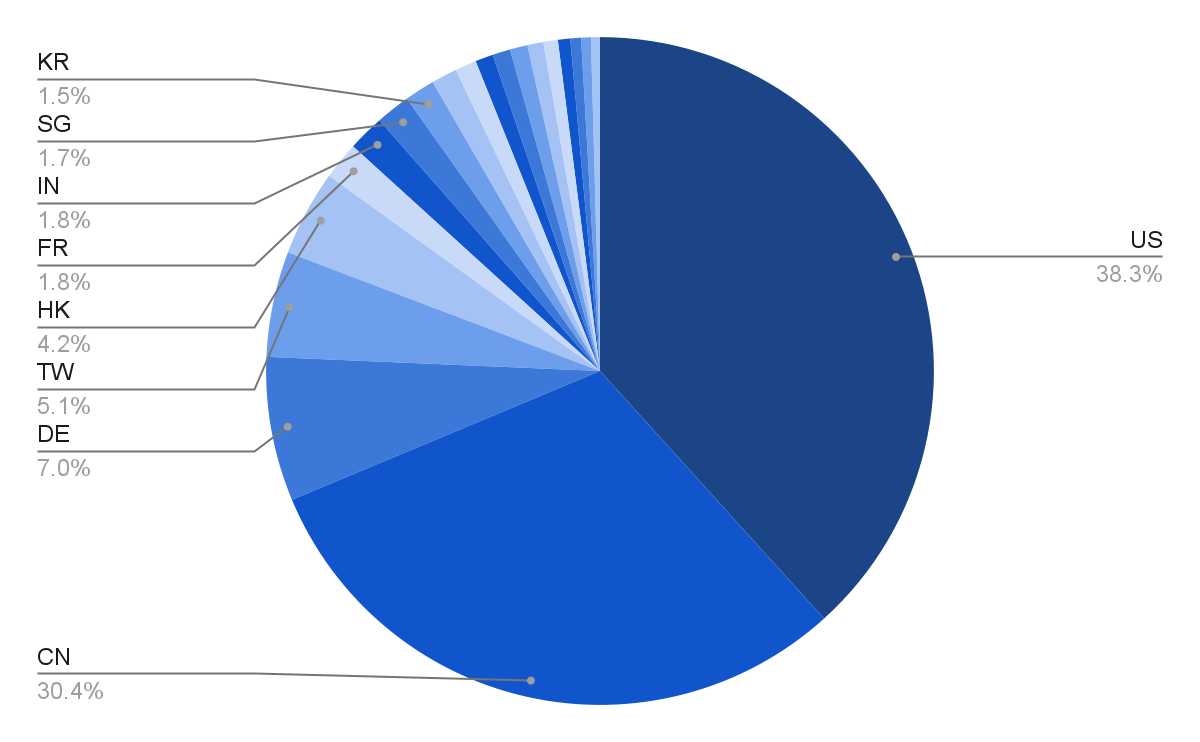

Distribution of Ollama servers showing concentration in US, China, and Germany

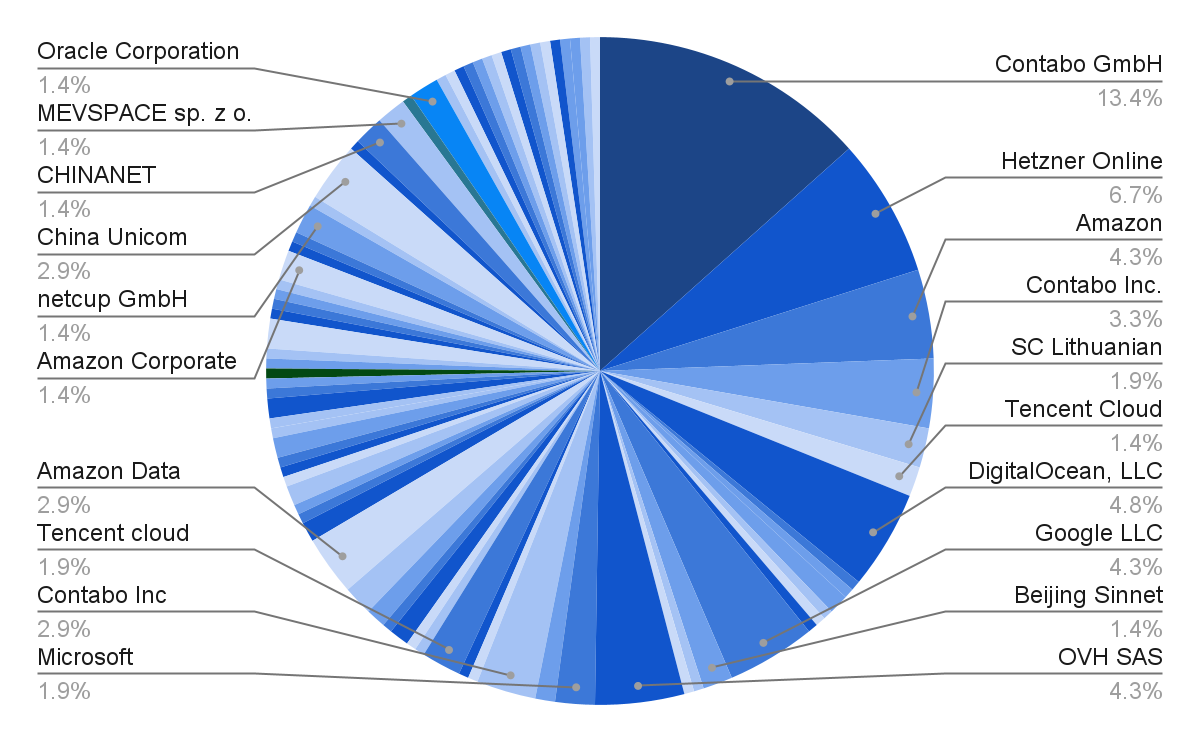

As with Ollama, the organizations owning the IP addresses are diffuse, again indicative of hobbyist users rather than corporate deployments that tend to cluster in major cloud providers like AWS, Azure, and Google.

Distribution of llama.cpp servers by IP owner

Distribution of /slots and /props endpoints

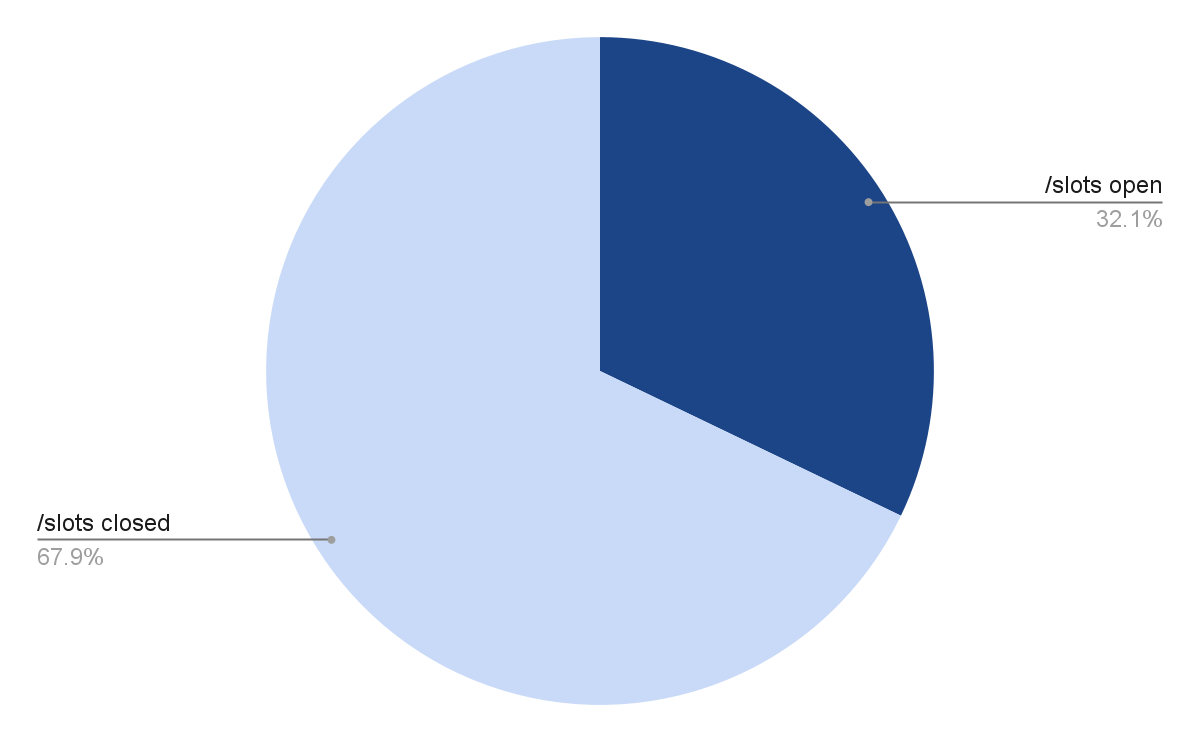

Given that /slots exposes more sensitive information than `/props` (and is explicitly warned against in the documentation), we expected to find far fewer instances of /slots than /props. While that was the case, the number of llama.cpp instances exposing their prompt data was higher than expected: about one-third of all servers.

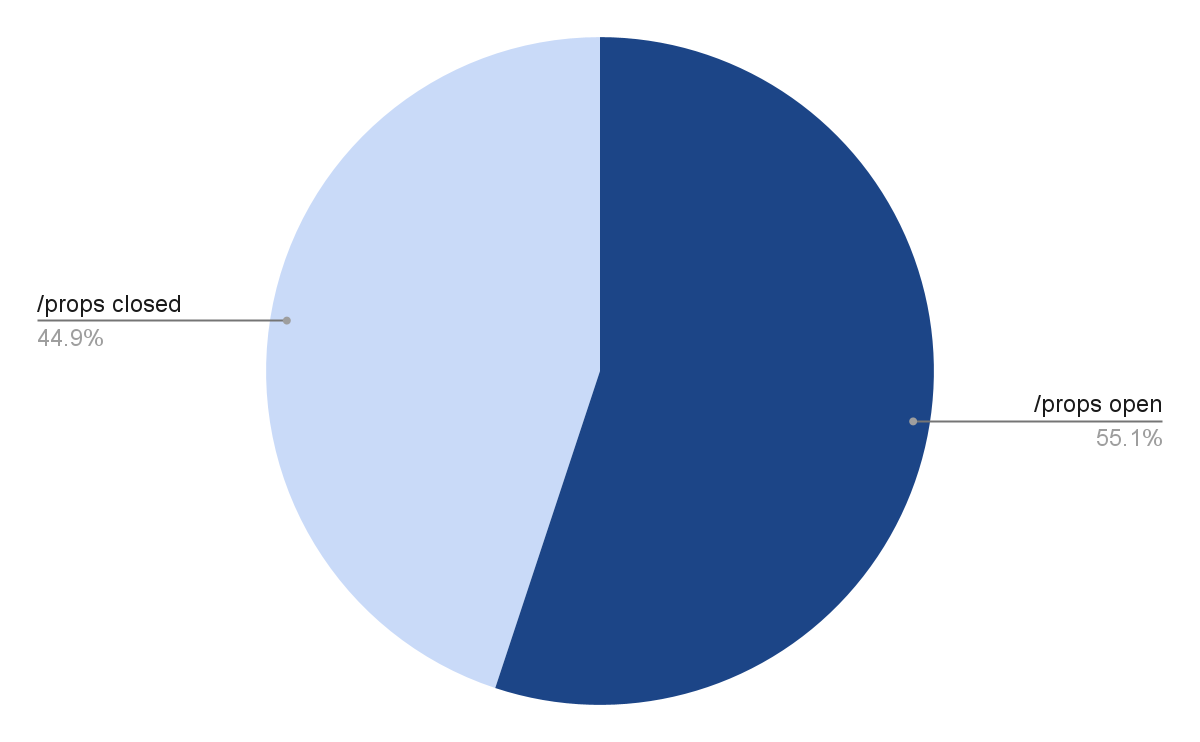

Conversely, the number of instances exposing system properties, just over half, is relatively low compared to the thousands of IPs exposing similar data via Ollama APIs.

Distribution of IP addresses allowing access to /slots endpoint

Distribution of IP addresses allowing access to /props endpoint

What’s in the prompts?

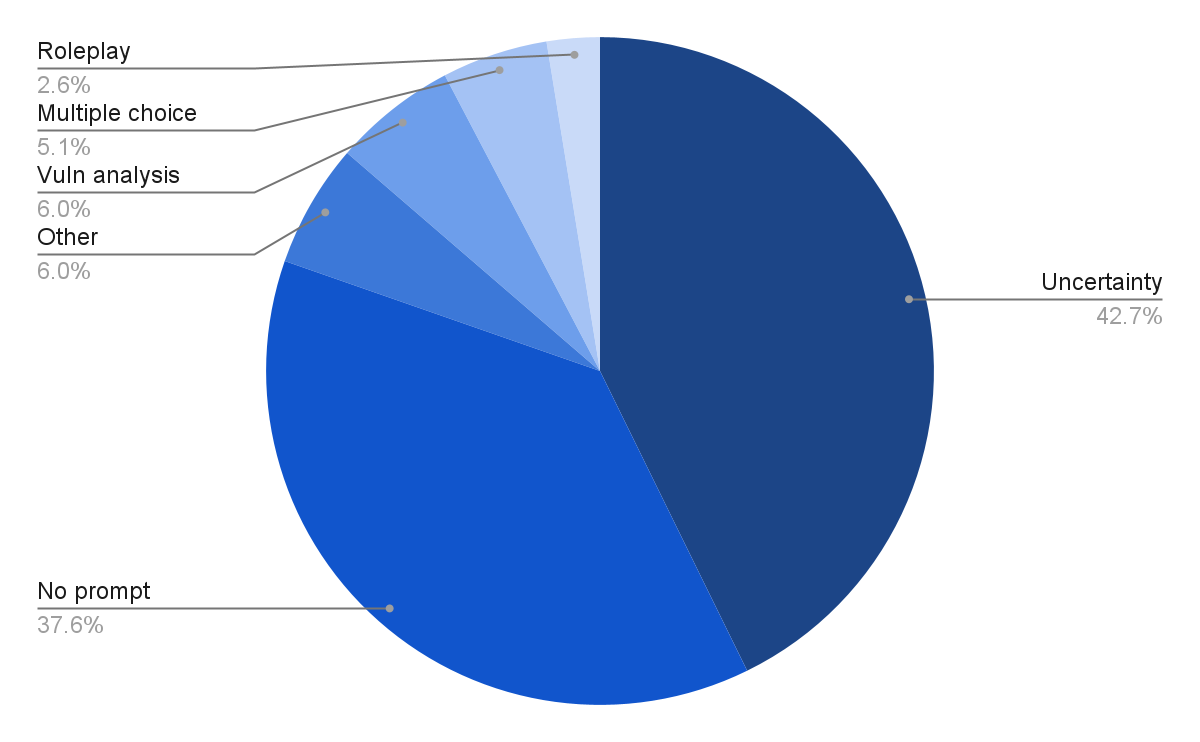

Of the 117 IP addresses exposing their prompts, very few would qualify as “production” use. Fifty servers showed the last prompt as a default question for testing LLMs, “How does the uncertainty principle affect the behavior of particles?” Forty-four had no recorded prompt at all. A handful had boilerplate analyses of software vulnerabilities, identical prompts to create multiple-choice quizzes, or public documents: evidence of testing the model, but not of sensitive information or ongoing use.

The llama.cpp servers with by far the longest prompts (the ones where the amount of content might indicate “production” use) were three chatbots with prompts for elaborate, long-running, erotic roleplays. Each of those unique IPs used a different model (Midnight-Rose-70B, magnum-72b, and L3.3-Nevoria-R1-70b), indicating they were most likely separate projects that just happened to share a similar function.

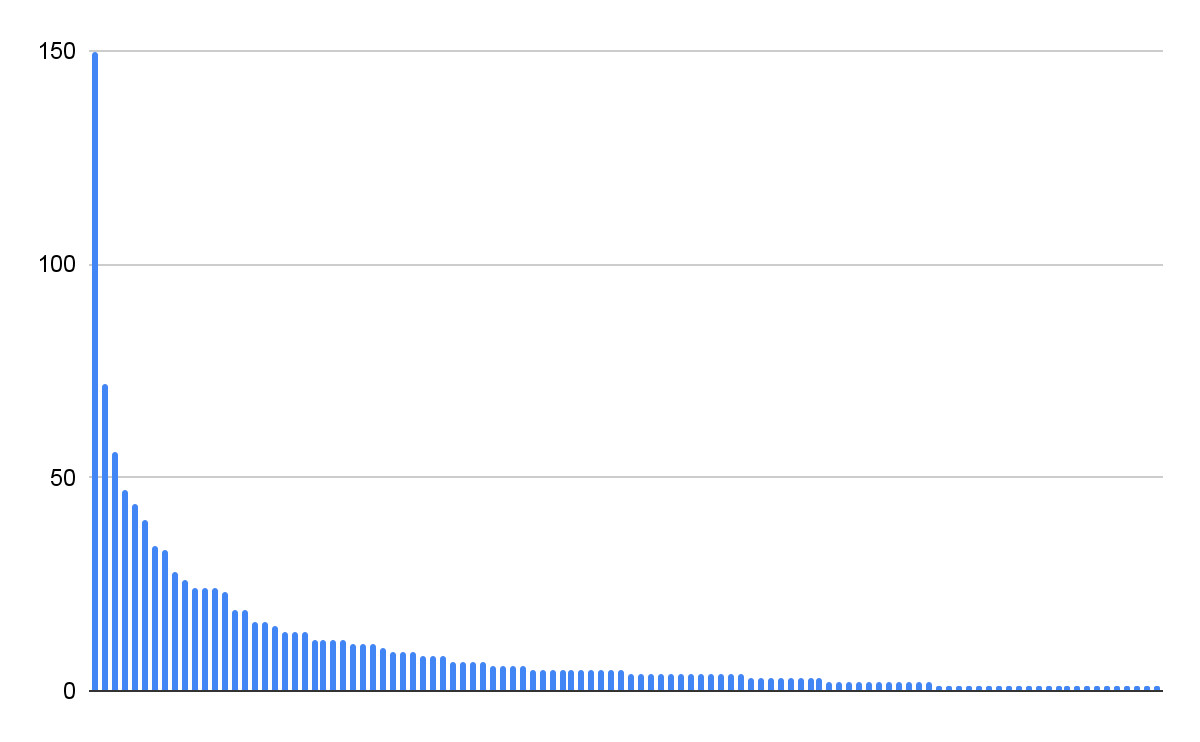

Two of those IPs were frequently updated with new prompts from different scenarios or characters. To measure their activity, we collected the prompts on those IPs from /slots once per minute for 24 hours. Using a hash of the prompt text, we then deduplicated the prompts for a total of 952 unique messages in that period, or around 40 prompts per hour. We did not submit prompts or otherwise interact with the prompting interface; we only observed publicly exposed prompts accessible via passive scanning.
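The deduplication step can be illustrated with a short sketch. The text above says only that "a hash of the prompt text" was used, so the choice of SHA-256, the function names, and the handling of empty samples are assumptions made for illustration.

```python
import hashlib


def dedupe_prompts(samples):
    """Return unique prompt texts in first-seen order, keyed by a SHA-256 digest."""
    seen = set()
    unique = []
    for text in samples:
        if not text:
            continue  # skip polls where no prompt was recorded
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(text)
    return unique


def prompts_per_hour(unique_count, hours):
    """Average number of unique prompts observed per hour."""
    return unique_count / hours
```

With the figures reported above, `prompts_per_hour(952, 24)` is roughly 39.7, which rounds to the stated 40 prompts per hour. Polling once per minute means the same prompt is often captured many times, which is why deduplication is needed before counting.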

After we reviewed the data, our major concern was that some of the content created by those LLMs described (fictional) children from ages 7-12 having sex.

To count the number of unique scenarios, we sliced the first 100 characters from each message to get a representative sample of the system prompt for each scenario. This method identified 108 unique system prompts or narrative scenarios. The scenario with the longest chat had 150 messages within the 24-hour period. Of the 108 scenarios, five involved children.
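The prefix-based grouping described above can be sketched as follows. It relies on the same premise as the text, namely that each message begins with its scenario's system prompt, so the first 100 characters identify the scenario. The function name and the sample data in the usage note are hypothetical.

```python
from collections import Counter


def count_scenarios(messages, prefix_len=100):
    """Count messages per scenario, using the first prefix_len characters as the key."""
    return Counter(msg[:prefix_len] for msg in messages)
```

For example, given messages that share a 100-character opening, `len(count_scenarios(messages))` gives the number of distinct scenarios and `count_scenarios(messages).most_common(1)` gives the scenario with the longest chat. Two scenarios whose system prompts happen to share their first 100 characters would be merged by this approach, so the count is best read as an approximation.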

Distribution of number of messages per scenario

While this content doesn’t involve any real children, the prevalence of AI-generated CSAM in even a narrow slice of this data shows how much LLMs have lowered the barrier to entry to generating such content. Moreover, the content is not just static text but a text-based interactive CSAM roleplay, something that is repellent, if not illegal, and which LLM technology makes trivial to create. Indeed, there are already many sites that operate as platforms for users to create “characters” for “uncensored” roleplay in hundreds of thousands of scenarios.

Conclusion

Information security teams should be aware of llama.cpp as a technology that can be misconfigured and expose confidential data. Cybersecurity’s cyber risk scanning detects IP addresses running llama.cpp to prevent third-party data breaches. When using llama.cpp, implementers should be familiar with secure configuration guidelines, most notably not enabling the /slots endpoint. In this survey, we did not detect any exposures of corporate data, but the potential exists, and infosec programs should continue monitoring for such events.

The particulars of the data we found available through llama.cpp APIs are not especially relevant to corporate information security teams but are applicable to the broader discussion of AI safety. Empirical observation of actually running LLMs through research surveys will be part of the ongoing project of understanding AI’s impact on society and what guardrails are needed.